The Fathers of Modern Technology: 6 Men Whose Innovations Shape Our World

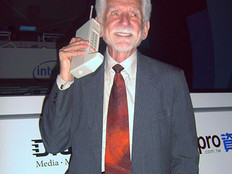

In 2012, BizTech published “Fathers of Technology: 10 Men Who Invented and Innovated in Tech,” to highlight the indelible contributions of men who invented some of the foundational technologies we use today, including Douglas Engelbart (the computer mouse), Marty Cooper (the cellphone) and Tim Berners-Lee (the World Wide Web).

Technologies often share more than one creator (Apple, for example, listed 14 people on its patent filing for the original iPhone). However, some inventors and innovators stand out as singular forces behind the creation of new technologies, or as key contributors to their development, without whom progress would not have been possible.

With that in mind, and in honor of Father’s Day, here is an updated version of the “Fathers of Technology.” Just as we recently did with “The New Mothers of Technology: 6 Women Who Have Led in Tech Since 2010,” we are highlighting tech pioneers whose inventions have spawned new technologies or taken the world by storm in recent years.

While this list is certainly not exhaustive (and please let us know in the comments who we missed), here’s a toast to the fathers of modern technology!

Andy Rubin

Smartphone OS Pioneer

His impact on technology:

The smartphone world (and the Internet of Things) would be far different today were it not for the Android platform. However, it was not a certainty that Android would ever succeed — or even be a mobile operating system.

Android, the company, was founded in October 2003 by Rubin, Rich Miner, Nick Sears and Chris White. Rubin said in 2013 that the tinkerers originally designed an open operating system based on the Linux kernel for “smart cameras” that would connect to PCs, according to IDG News Service. However, in a 2003 interview with Bloomberg BusinessWeek, two months before incorporating Android, “Rubin said there was tremendous potential in developing smarter mobile devices that are more aware of its owner's location and preferences.” After about five months, Android switched gears and started to develop an open-source solution for handset software.

Google bought Android in 2005, and for more than two years a large group of engineers led by Rubin worked to develop the open-source Android OS for smartphones. In November 2007, Google announced the creation of the Open Handset Alliance, designed to promote phones running on Android. The first Android phone, the T-Mobile G1 (built by HTC), debuted in September 2008. The operating system incorporated Google’s core services, including search, Gmail and Maps, and has evolved over time to support mobile payments, augmented reality and many other technologies.

It took a while for Android to gain steam (Gartner reported that Android had just a 3.9 percent share of the global smartphone market at the end of 2009).

However, as Samsung, LG Electronics, Sony, Motorola, HTC and many others embraced Android to deliver alternatives to the iPhone — and as Nokia and BlackBerry struggled — Android’s global smartphone market share swelled rapidly (to nearly 70 percent at the end of 2012 and then nearly 82 percent at the end of 2016). Rubin also pushed Android into tablets starting in 2010 before stepping down from his role as the head of Android in 2013 to focus on robotics.

Sundar Pichai, who succeeded Rubin and eventually became CEO of Google, said in May at the Google I/O developer conference that there are now 2 billion monthly active users on the Android platform, which, as The Verge notes, includes “smartphones, tablets, Android Wear devices, Android TVs, and any number of other gadgets that are based on the operating system.”

Where is he now?

Rubin left Google in the fall of 2014, and a few months later, as Wired notes, he started Playground Global, a technology incubator and investment fund for hardware startups that provides them with money, advice and crucial engineering talent. Rubin has also founded a consumer hardware company called Essential, which unveiled a smartphone and a digital home assistant akin to Amazon Echo or Google Home. The phone will be sold exclusively by Sprint, but Essential has big ambitions and has reportedly raised $300 million so far.

Martin Casado

Networking Dynamo

His impact on technology:

Software-defined networking is one of the most significant developments in networking technology. SDN represents a new way of architecting networks: it separates the network control plane from the data plane, enabling abstraction of resources and programmable control. Software at the control layer gives IT staff the ability to automate manual processes. Instead of tying network functions and policies to hardware, SDN allows IT to control those tasks through software. The result is open, interoperable networks that can be dynamically adjusted.

Much of SDN can be traced back to the work of Martin Casado, a Spanish scientist, who, while working on his Ph.D. at Stanford University, laid the foundation for the OpenFlow standard. “SDN and OpenFlow came out of work we were doing at Stanford,” Casado told Enterprise Networking Planet in 2013. OpenFlow is the protocol that catalyzed the development of SDN.

How does OpenFlow work?

“In a classical router or switch, the fast packet forwarding (data path) and the high level routing decisions (control path) occur on the same device,” OpenFlow.org notes. “An OpenFlow Switch separates these two functions. The data path portion still resides on the switch, while high-level routing decisions are moved to a separate controller, typically a standard server.”
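To make that split concrete, here is a minimal Python sketch of the reactive pattern the quote describes, under a deliberately simplified model: the `Switch` only looks packets up in its flow table, and on a table miss it defers to a separate `Controller`, which decides where the traffic should go and installs a rule so later packets stay on the switch’s fast path. The class names, the match-on-destination-address rule and the `("output", port)` action tuples are hypothetical illustrations, not the OpenFlow wire protocol or any real controller’s API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical action format for this sketch: ("output", port) or ("drop", None).
Action = Tuple[str, Optional[int]]


@dataclass(frozen=True)
class Packet:
    in_port: int
    dst_mac: str


@dataclass
class Controller:
    """Control path: holds the network-wide view and makes routing decisions."""
    mac_to_port: Dict[str, int] = field(default_factory=dict)

    def handle_miss(self, switch: "Switch", packet: Packet) -> Action:
        out_port = self.mac_to_port.get(packet.dst_mac)
        if out_port is None:
            # Unknown destination: drop here (a real controller might flood instead).
            return ("drop", None)
        action: Action = ("output", out_port)
        # Push the decision down so the switch can handle later packets itself.
        switch.install_rule(packet.dst_mac, action)
        return action


@dataclass
class Switch:
    """Data path: only matches packets against its flow table."""
    controller: Controller
    flow_table: Dict[str, Action] = field(default_factory=dict)
    misses: int = 0

    def install_rule(self, dst_mac: str, action: Action) -> None:
        self.flow_table[dst_mac] = action

    def forward(self, packet: Packet) -> Action:
        action = self.flow_table.get(packet.dst_mac)
        if action is None:
            # Table miss: defer the routing decision to the separate controller.
            self.misses += 1
            action = self.controller.handle_miss(self, packet)
        return action


ctl = Controller(mac_to_port={"aa:bb": 2})
sw = Switch(controller=ctl)
print(sw.forward(Packet(in_port=1, dst_mac="aa:bb")))  # ('output', 2), decided by the controller
print(sw.forward(Packet(in_port=3, dst_mac="aa:bb")))  # ('output', 2), served from the flow table
print(sw.misses)  # 1
```

Only the first packet to a destination involves the controller in this model; afterward the switch forwards on its own, which is why the data path can stay fast while the decision-making lives on a standard server.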

In 2007, Casado co-founded Nicira, a network virtualization company, along with Nick McKeown and Scott Shenker, who had advised Casado’s doctoral work. Nicira was one of the original developers of Open vSwitch technology, which enables a virtual switch to run in software, Enterprise Networking Planet notes.

In 2011, McKeown and Shenker co-founded the Open Networking Foundation to transfer control of OpenFlow to a nonprofit organization. A little more than a year later, in July 2012, VMware acquired Nicira for $1.26 billion. Casado joined VMware, which allowed SDN to flourish. “My goal in creating Nicira was to change networking,” Casado told Enterprise Networking Planet. “It’s very difficult to do that alone, and now I’m working with the best company in the world to make it happen.”

By the time he left VMware in February 2016, he was executive vice president and general manager of its networking and security business.

According to IDC, the worldwide SDN market — comprising physical network infrastructure, virtualization/control software, SDN applications (including network and security services) and professional services — will be worth nearly $12.5 billion in 2020.

Where is he now?

Casado is now a general partner at venture capital firm Andreessen Horowitz, which had invested in Nicira. He told Business Insider in 2016 that he was excited to help find and advise promising startups that can sell directly to developers: “It’s why I got into venture capital.”

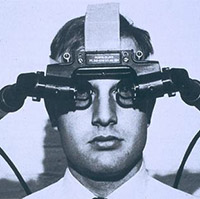

Ivan Sutherland

Graphical Godfather

His impact on technology:

Virtual reality and augmented reality have been two of the hottest technologies in recent years. VR produces a computer-generated, immersive reality that users can interact with (usually via a headset). AR involves bringing digital information into a user’s field of view, overlaid onto the real world, which the user observes through a headset or on a mobile device.

Apple is starting to invest heavily in AR; Oculus, Samsung, HTC and Microsoft are major investors in VR. All of them owe a debt of gratitude to Ivan Sutherland.

Although many have contributed to the development of these technologies over time, Sutherland is widely regarded as a pioneer of both graphical user interfaces and virtual reality.

According to Portland State University, in 2012, when the Inamori Foundation gave Sutherland the Kyoto Prize, Japan’s highest private award for global achievement, it noted his early work developing Sketchpad, “a graphical interface program that allowed the user to directly manipulate figures on a screen through a pointing device,” in 1963.

In their book, Understanding Virtual Reality: Interface, Application, and Design, William Sherman and Alan Craig note that Sutherland, then a Massachusetts Institute of Technology doctoral student, used a “light pen to perform selection and drawing interaction, in addition to keyboard input.”

In 1965, Sutherland described his concept of the “ultimate display” in a presentation to the International Federation for Information Processing Congress. As Sherman and Craig note, he explained “the concept of a display in which the user can interact with objects in some world that does not need to follow the laws of physical reality: ‘It is a looking glass into a mathematical wonderland.’”

The big breakthrough came in 1968, when Sutherland (then at Harvard University), along with student Bob Sproull, came up with what Sherman and Craig describe as a “stereoscopic head-mounted display,” which used “miniature cathode ray tubes, similar to a television picture tube, with optics to present separate images to each eye and an interface to mechanical and ultrasonic trackers.”

The VR display was so heavy that it needed to be suspended from the ceiling, earning the nickname “The Sword of Damocles.” It demonstrated crude graphics for users that included “a stick representation of a cyclohexane molecule and a simple cubic room with directional headings on each wall,” Sherman and Craig note.
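The stereoscopic principle behind that head-mounted display, presenting a slightly different image to each eye, can be illustrated with a short Python sketch. It assumes a modern pinhole-camera model, an illustrative 64 mm eye separation and a wireframe cube standing in for the “simple cubic room”; it is not a reconstruction of how the 1968 hardware actually computed its images.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]

IPD = 0.064   # assumed distance between the eyes, in meters (illustrative value)
FOCAL = 1.0   # assumed focal length of the simple pinhole model


def cube_vertices(center_z: float, size: float) -> List[Point3]:
    """Eight corners of a cube centered on the viewing axis, center_z in front of the viewer."""
    h = size / 2
    return [(x, y, center_z + dz) for x in (-h, h) for y in (-h, h) for dz in (-h, h)]


def project(point: Point3, eye_x: float) -> Point2:
    """Perspective-project a point for an eye at (eye_x, 0, 0) looking down the +z axis."""
    x, y, z = point
    return (FOCAL * (x - eye_x) / z, FOCAL * y / z)


def stereo_pair(points: List[Point3]) -> Tuple[List[Point2], List[Point2]]:
    """Render the same points once per eye, each eye offset by half the eye separation."""
    left = [project(p, -IPD / 2) for p in points]
    right = [project(p, IPD / 2) for p in points]
    return left, right


cube = cube_vertices(center_z=2.0, size=0.5)
left, right = stereo_pair(cube)
for p, l, r in zip(cube, left, right):
    # Horizontal disparity between the two images; larger for nearer vertices.
    print(f"z={p[2]:.2f} m  disparity={l[0] - r[0]:.4f}")
```

In this model each vertex’s horizontal offset between the two views works out to `FOCAL * IPD / z`, so nearer corners of the cube shift more than farther ones. That difference is the depth cue a stereoscopic display relies on.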

Many others followed in Sutherland’s footsteps, including Boeing researcher Thomas Caudell, who coined the term “augmented reality” in 1990. But much of the pioneering graphics and VR work that today’s VR and AR devices and software are built on can be traced to Sutherland.

Where is he now?

Sutherland is a scientist at Portland State’s Maseeh College of Engineering and Computer Science. He also leads the Asynchronous Research Center at Portland State.

Alon Cohen

VoIP Visionary

His impact on technology:

Today, users of a multitude of mobile and desktop applications regularly take advantage of Voice over Internet Protocol technology. Many popular communication apps, from Cisco Systems’ WebEx to Skype, trace their lineage back to technology pioneered by Alon Cohen.

VoIP uses IP to transmit real-time voice communications, and its foundation dates back several decades (voice packets were sent over ARPANET in the 1970s, notes the website Get VoIP).

“The original idea behind VoIP was to transmit real-time speech signal over a data network and to reduce the cost” for long-distance calls, because VoIP calls would go over packet-based networks at a flat rate, instead of the more expensive public switched telephone network, as noted in the book Guide to Voice and Video over IP: For Fixed and Mobile Networks, by Lingfen Sun, Is-Haka Mkwawa, Emmanuel Jammeh and Emmanuel Ifeachor.

The authors note that Cohen and Lior Haramaty invented commercial VoIP in 1995. The pair, both from Israel, founded the company VocalTec Communications in 1989.

Cohen’s patent application for VoIP, filed in November 1994 (and granted in 1998), describes an “apparatus for providing real-time or near real-time communication of audio signals via a data network.” The patent describes a way to “provide an audio transceiver between a personal computer (PC) and a packet data network.”
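As a rough illustration of what an audio transceiver between a PC and a packet data network has to do, the Python sketch below chops a stream of digitized audio into short frames, tags each with a sequence number and timestamp, and reassembles the stream at the receiver even when packets arrive out of order. The 8 kHz one-byte-per-sample format, the 20 ms frame size and the `VoicePacket` fields are assumptions loosely modeled on later RTP-style VoIP, not details taken from Cohen’s patent.

```python
import random
from dataclasses import dataclass
from typing import List

SAMPLE_RATE = 8000  # assumed telephone-quality sampling rate, samples per second
FRAME_MS = 20       # assumed amount of audio carried per packet, in milliseconds
SAMPLES_PER_FRAME = SAMPLE_RATE * FRAME_MS // 1000  # 160 one-byte samples per packet


@dataclass(frozen=True)
class VoicePacket:
    seq: int        # sequence number so the receiver can restore order
    timestamp: int  # index of the first audio sample carried in this packet
    payload: bytes  # the digitized voice samples themselves


def packetize(samples: bytes) -> List[VoicePacket]:
    """Sender side: split a digitized voice stream into small numbered packets."""
    return [
        VoicePacket(seq, start, samples[start:start + SAMPLES_PER_FRAME])
        for seq, start in enumerate(range(0, len(samples), SAMPLES_PER_FRAME))
    ]


def reassemble(packets: List[VoicePacket]) -> bytes:
    """Receiver side: put packets back in sequence order and rebuild the stream."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


audio = bytes(i % 256 for i in range(SAMPLE_RATE))  # one second of stand-in audio
packets = packetize(audio)
print(len(packets))  # 50 packets of 20 ms each
random.seed(0)
random.shuffle(packets)  # simulate out-of-order arrival over a packet network
print(reassemble(packets) == audio)  # True
```

A real receiver would also hold packets briefly in a jitter buffer and conceal lost frames rather than simply sorting everything it has received.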

VocalTec released the first “Internet Phone” in February 1995, note authors Sun, Mkwawa, Jammeh and Ifeachor. Since then, they add, VoIP “has grown exponentially, from a small-scale lab-based application to today’s global tool with applications in most areas of business and daily life.”

VoIP can be found in everything from Apple’s FaceTime service to Voice over LTE high-definition calling and is the modern way much of the world communicates via voice.

Where is he now?

Cohen is the executive vice president and chief technology officer of Phone.com, which offers virtual phone service via a self-service cloud communications platform for entrepreneurs and small companies.

John McCarthy

AI Architect

His impact on technology:

Artificial intelligence may well be the biggest technological breakthrough yet, although many argue that we have not even achieved true AI, only machines that are starting to approach human intelligence in some ways.

AI will certainly continue to evolve in the decades to come, and while it has many fathers (British scientists Alan Turing and Christopher Strachey, and Americans Arthur Samuel, Marvin Minsky, Allen Newell and Herbert Simon were all early pioneers), John McCarthy holds a solid claim to being the father of AI.

In 1956, McCarthy was one of the organizers of the Dartmouth Summer Research Project on Artificial Intelligence, one of the underpinnings of which was the idea that “every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it.”

McCarthy persuaded the conference to accept “artificial intelligence” as the name of the field the attendees were studying. “I came up with the name when I had to write the proposal to get research support for the conference from the Rockefeller Foundation,” he told CNET in 2006. “And to tell you the truth, the reason for the name is, I was thinking about the participants rather than the funder.” The conference gave AI not only its name but also its mission and its first major success: The program Logic Theorist, the first program specifically designed to imitate a human’s problem-solving skills, debuted at the conference.

In 1958, after McCarthy moved from Dartmouth College to the Massachusetts Institute of Technology, he developed the LISP programming language, which, Wired notes, “became the standard programming language of the artificial intelligence community, but would also permeate the computing world at large.”

LISP, which has evolved over time, is used not only in “robotics and other scientific applications but in a plethora of internet-based services, from credit-card fraud detection to airline scheduling” and also paved the way for voice recognition technology, the Independent notes.

In 1962, McCarthy became a full professor at Stanford University, where he remained until his retirement in 2000.

Where is he now?

McCarthy died on Oct. 24, 2011. He was widely lauded at the time of his death, and he continues to be remembered fondly by Stanford. Whitfield Diffie, an internet security expert who worked as a researcher for McCarthy at the Stanford Artificial Intelligence Laboratory, told The New York Times when McCarthy died that, in the study of AI, “no one is more influential than John.”

J.C.R. Licklider

Cloud Prophet

His impact on technology:

Although cloud computing is now a dominant force in the IT world, the concept has been around for decades.

As Mashable notes: “In the 1950s, ‘time-sharing’ — what is now considered the underlying concept of cloud computing — was used in academia and large corporations. Several clients needed to access information on separate terminals, but the mainframe technology was costly. To save money, they needed to find a way for multiple users to share CPU time. From there, it was just a hop, step and a jump away to the cloud of today.”
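The CPU-sharing idea in that quote can be sketched as a simple round-robin scheduler in Python: each user’s job gets a fixed slice of processor time in turn until it finishes. The fixed quantum, the user names and the job lengths are hypothetical, and historical time-sharing systems used more elaborate scheduling policies than this.

```python
from collections import deque
from typing import Dict, List, Tuple


def round_robin(jobs: Dict[str, int], quantum: int) -> List[Tuple[str, int]]:
    """Share a single CPU among several users' jobs in fixed time slices.

    jobs maps each user to the units of CPU time their job still needs.
    Returns the order in which the CPU was handed out, as (user, units run).
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs.items())
    schedule: List[Tuple[str, int]] = []
    while queue:
        user, remaining = queue.popleft()
        ran = min(quantum, remaining)
        schedule.append((user, ran))
        if remaining > ran:
            # Unfinished jobs go to the back of the line and wait for another turn.
            queue.append((user, remaining - ran))
    return schedule


slices = round_robin({"alice": 5, "bob": 2, "carol": 3}, quantum=2)
print(slices)
# [('alice', 2), ('bob', 2), ('carol', 2), ('alice', 2), ('carol', 1), ('alice', 1)]
```

In this model every user sees steady progress even though only one job runs at any instant, which is how a single costly machine could serve many terminals at once.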

Joseph Carl Robnett Licklider is not a name many people know, but he developed many of the concepts that would later blossom into cloud computing.

In the 1940s and 1950s, Licklider was a lecturer at Harvard, a researcher at its Psycho-Acoustics Laboratory, and then an associate professor at MIT. In 1957 he joined the engineering firm of Bolt Beranek & Newman as vice president in charge of research in psychoacoustics, engineering psychology and information systems. While at BBN, he came up with his earliest ideas for a global computer network in a series of memos discussing an “Intergalactic Computer Network,” the Internet Hall of Fame notes.

In 1962, he joined the Defense Department’s Advanced Research Projects Agency. The Internet Hall of Fame, in its induction citation for Licklider, notes that it was the “persuasive and detailed description of the challenges to establishing a time-sharing network of computers that ultimately led to the creation of the ARPANET,” the precursor to the internet.

Margaret Lewis, product marketing director at AMD, told Computer Weekly that Licklider’s “vision was for everyone on the globe to be interconnected and accessing programs and data at any site, from anywhere.”

“It is a vision that sounds a lot like what we are calling cloud computing,” Lewis said.

It wasn’t until the late 1990s and early 2000s that broadband connections allowed for the realization of Licklider’s vision.

Where is he now?

Licklider died on June 26, 1990. His obituary in The New York Times noted that he was “credited with pioneering work that established the basis for concepts like time sharing and resource sharing.”