Biotech Companies See ROI in High-Performance Computing Systems

Accelerate Diagnostics is deep into the development of BACcel, a multifunction instrument for expediting and improving the way medical facilities identify and treat bacterial infections.

The diagnostic system will incorporate a digital microscope to capture time-lapse images of live samples taken directly from patients, automated image analysis software and high-performance computing (HPC) capabilities. Combined, these core components will let lab clinicians rapidly identify pathogens and determine susceptibility to available antibiotics. Traditional methods require 24 to 48 hours; Accelerate's system can do it in six hours or less.

The Tucson, Ariz., biotech company is now developing the first turnkey prototype to place in hospital labs for clinical trials. These instruments will leverage testing algorithms, still under development, that require processing and analysis of large image sets. To handle this computing element, Accelerate needed a purpose-built server capable of rapid image processing.

"We're not a big-enough shop to be in the business of building HPC machines," says Paul Richards, software engineer and head of IT at Accelerate. "We needed something we could bring into our lab, flip on and basically have work out of the box."

So in early 2013, Accelerate turned to PSSC Labs, which integrated an NVIDIA Tesla K10 GPU accelerator, an Intel motherboard with Xeon E5 processors, memory, a network interface and high-speed storage to deliver a server customized to meet the company's needs.

The system performed so well that the company ordered three more Tesla-based servers a few weeks later to create its own HPC cluster. The cluster has helped the company ramp up development and move more quickly toward clinical trials, FDA filing and commercial release.

"We're able to rip through thousands of images with these systems. We then take, say, 40 gigabytes' worth of sample data and analyze it using our proprietary software, and — in about 15 minutes — get the diagnostic answers we need," Richards says.

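As a rough illustration of the figures Richards cites, the short sketch below converts "40 gigabytes in about 15 minutes" into an average processing rate. It assumes decimal units (1 GB = 1,000 MB) and treats the quoted numbers as exact, which they are not; the function name is hypothetical and is not part of Accelerate's software.

```python
def throughput_mb_per_s(gigabytes: float, minutes: float) -> float:
    """Average sustained rate in megabytes per second.

    Assumes decimal units (1 GB = 1,000 MB); the inputs are the
    approximate figures quoted in the article, not measured values.
    """
    return gigabytes * 1000 / (minutes * 60)


# About 40 GB of sample data analyzed in about 15 minutes.
rate = throughput_mb_per_s(40, 15)
print(f"{rate:.1f} MB/s")
```

By this estimate, the cluster would sustain roughly 44 MB of analyzed image data per second for the whole run.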
Compute to Compete

Once primarily the province of large government and academic research projects, HPC systems have made deep inroads into the commercial space. In fact, private-sector use now accounts for most of the market's growth.

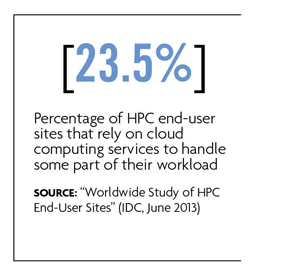

Encouraged by new lower-priced packaged and cloud-based options, a growing number of businesses are acknowledging that "to compete, you must compute" and turning to HPC systems to supercharge modeling, simulation and data analysis. In turn, they're compressing research timeframes, speeding time to market and improving the performance of financial services, computer-aided engineering and many other applications.

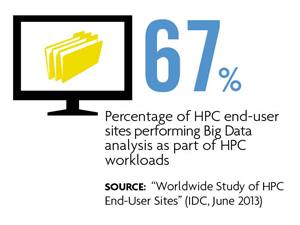

Growing HPC adoption among small and midsized businesses (SMBs) coincides with the increased use of accelerators and co-processors to improve system performance and lower power consumption. IDC research shows the proportion of sites using co-processors in HPC systems jumped from 28.2 percent in 2011 to 76.9 percent in 2013.

Photo: Steve Craft

The Big Data push also has driven up use. "The HPC community is the original home of Big Data; it's really been doing Big Data forever," says Steve Conway, research vice president at IDC.

The traditional focus has been data-intensive modeling and simulation. Now, small and midsized businesses developing and deploying products that incorporate high-end analytics methodologies, such as graph analytics, are adopting HPC servers because they can't run complex analytics problems without muscular processing capabilities. "A slew of companies — many of them startups and SMBs — are pushing into the HPC space primarily to do complicated analytics in near real time," Conway says.

Got HPC?

The commoditization of technologies used within HPC systems puts them within reach of SMBs, says James Lowey, vice president of technology at the Translational Genomics Research Institute (TGen), where he's been involved in the deployment of three supercomputers since 2003. Processing genomics data creates unique technology demands for the Phoenix nonprofit, but Lowey says some SMBs can take advantage of packaged HPC systems priced for entry-level adoption.

"You can get 512 cores, storage and fast networking capabilities in fully integrated systems," Lowey says. "They're available in a single rack that you essentially plug in and start using. You just need to install the software you want them to run."

And cost is no longer an entry barrier, he adds. "It used to cost $20 million to $30 million to buy one of these machines; now, they start at under $10,000."

For its part, startup Kela Medical of Ontario is taking advantage of cloud-based HPC through the Southern Ontario Smart Computing Innovation Platform (SOSCIP), an initiative overseen by Ontario Centres of Excellence (OCE), in partnership with IBM and seven Ontario universities. The program helps qualified SMBs accelerate development and commercialization of products for health, smart infrastructure and agile-computing apps. SOSCIP provides Kela with access to IBM's cloud-computing infrastructure, its Cognos business intelligence and SPSS predictive analytics products, and storage space.

Kela is in the early modeling stage of developing its pHR Card System, a smart card that incorporates a flash-memory chip capable of storing a patient's entire personal health record and that syncs with data in healthcare management systems. HPC is instrumental in the modeling, simulation and analytical work required to ensure pHR Card Systems can manage dynamic health records, including diagnostic images, tailored to individual patient profiles.

The intended outcome, says Kela CTO Daniel Sin, is a patient-centric system that lets patients and providers collaborate to improve an individual's care.

"We need HPC to help us determine how to take decades' worth of a patient's medical records, captured in multiple data formats, and present them in an intuitive, unified way," Sin says. "Without SOSCIP, we'd never have access to these capabilities or even be able to discover what's possible with medical records."

Considerations for Adoption

Before embarking on an HPC program, a company first should ensure that its processing workload justifies the capital expense, TGen's Lowey says. He also advises "finding a trustworthy partner to help, especially if you don't have the in-house expertise to build this kind of system."

Data storage demand is another important consideration, adds Lowey, whose organization houses a petabyte of genomics data, which it must keep because it can affect medical treatment years after collection. On average, TGen's genomics data has doubled annually for the past three years, a trend Lowey expects to continue. SMBs required to store such large volumes of data, he says, must find ways to make it accessible in formats that support mining and analytics.
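To see what annual doubling implies for capacity planning, the sketch below projects storage from a one-petabyte starting point. The five-year horizon and the assumption that growth stays at exactly 2x per year are illustrative choices, not figures from TGen, and the function name is hypothetical.

```python
def projected_petabytes(current_pb: float, years: int,
                        growth_factor: float = 2.0) -> list[float]:
    """Storage needed at the end of each year, starting from today (year 0).

    Assumes the data set grows by a constant factor every year
    (2.0 = doubling); real growth will vary from year to year.
    """
    return [current_pb * growth_factor ** year for year in range(years + 1)]


# Start from roughly 1 PB and look five years ahead (an assumed horizon).
for year, size in enumerate(projected_petabytes(1.0, 5)):
    print(f"year {year}: {size:g} PB")
```

Under these assumptions, one petabyte today becomes 32 petabytes in five years, which is why storage format and accessibility need to be planned early.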

Accelerate also faces significant storage challenges. "We're generating tremendous amounts of data with our microscopic images, adding a terabyte to our storage facility daily," Richards says. "Since early 2013, we've created around 100 million files worth of bacterial images and associated metadata."

Ultimately, however, the value of HPC is in the processing capabilities and functionalities it provides Accelerate Diagnostics' product teams, Richards notes. "If HPC is suited to your application, you can do crazy stuff with it — that's why we're exploiting it."