These risks typically show up in five ways:

- Code generation: AI may write insecure or incomplete scripts when assisting with automation, inadvertently introducing new vulnerabilities into systems.

- Threat validation: When AI assists with alert investigation, it may overlook key indicators of compromise, causing defenders to miss active threats.

- Detection logic: AI can help write detection rules and content, but if its underlying assumptions are wrong, critical threats may go unnoticed.

- Remediation planning: AI-generated remediation suggestions might not account for the current state of the system, leading to ineffective or even harmful changes.

- Prioritization and triage: AI may misrank threats, leading teams to focus on lower-priority issues while more serious risks slip through.

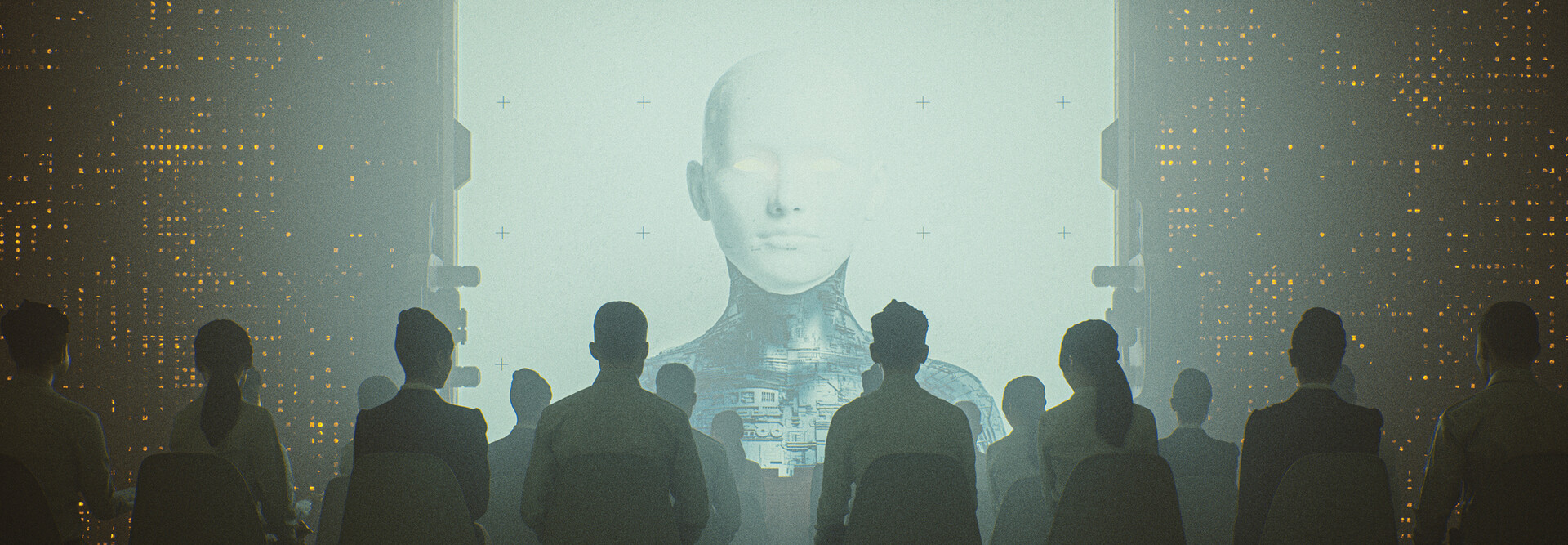

Many teams start trusting AI blindly after it gets things right a few times, until the day it doesn't. The key is to treat AI as a collaborator, not a delegate.

How Security Teams Can Combat AI Hallucinations

Minimizing these risks starts with two things: human validation and models with built-in reasoning.

Financial services organizations are wise to keep humans in the loop for other kinds of AI-generated recommendations, such as credit decisions. Security teams should do the same: a human analyst should review any AI recommendation before it's deployed. Organizations should also validate the state of their endpoints before acting on AI-generated suggestions. If AI recommends upgrading Chrome, for example, a person should first confirm that the upgrade is appropriate. This constant validation loop helps prevent bad assumptions from cascading into larger errors.
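
The validation loop described above can be sketched as a simple two-gate check. This is a minimal, hypothetical illustration in Python, not a feature of any specific product: the `Recommendation` record, the `review` function and the `INVENTORY` lookup are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Recommendation:
    """A hypothetical AI-generated remediation suggestion."""
    action: str      # e.g. "upgrade"
    software: str    # e.g. "chrome"
    endpoint: str    # hostname the change would apply to
    rationale: str   # the model's stated reasoning, shown to the analyst


def review(rec: Recommendation,
           endpoint_check: Callable[[Recommendation], bool],
           analyst_approves: Callable[[Recommendation], bool]) -> str:
    """Decide whether an AI recommendation may be deployed.

    Both gates must pass: the endpoint's current state must support the
    change, and a human analyst must explicitly approve it.
    """
    # Gate 1: check the real system state instead of trusting the model's assumptions.
    if not endpoint_check(rec):
        return "rejected: endpoint state does not support this change"
    # Gate 2: a person signs off before anything is changed.
    if not analyst_approves(rec):
        return "rejected: analyst declined"
    return "approved for deployment"


# Hypothetical inventory of installed software per endpoint.
INVENTORY: Dict[str, Dict[str, str]] = {"ws-042": {"chrome": "126.0"}}


def software_present(rec: Recommendation) -> bool:
    """Allow the change only if the software is actually installed on that host."""
    return rec.software in INVENTORY.get(rec.endpoint, {})


if __name__ == "__main__":
    rec = Recommendation("upgrade", "chrome", "ws-099", "Outdated browser detected")
    # ws-099 is not in the inventory, so the first gate rejects the
    # recommendation before an analyst is even asked.
    print(review(rec, software_present, lambda r: True))
```

Checking system state first means analysts only review suggestions that fit an endpoint's actual configuration. In practice, the inventory lookup would come from whatever asset-management source the organization already trusts.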

Beyond architecture, user education plays a huge role. Teams must learn to recognize when an AI result looks "off." That instinct to pause and question a result, even when a tool has been reliable in the past, must be preserved. One tactic we've found effective is refining the user interface of our threat detection solutions to highlight the most critical data points. That way, the analyst's eye is drawn to what matters most, not just to what the AI emphasizes.

Reducing background noise is also important. Many AI misfires are compounded by environments overwhelmed with alerts caused by poor security hygiene, including unpatched systems and misconfigurations. Cleaning up that noise makes it easier for both humans and machines to focus on what's truly urgent.

Ultimately, AI will continue to transform security operations. But at this stage, the stakes are simply too high to trust it without question. Security professionals must understand the models behind their tools, the data those tools are trained on and the architectural assumptions they make. AI is a powerful collaborator, but only if we keep humans in the loop.